Building a Real-Time Data Pipeline Using Python, MySQL, Kafka, and ClickHouse

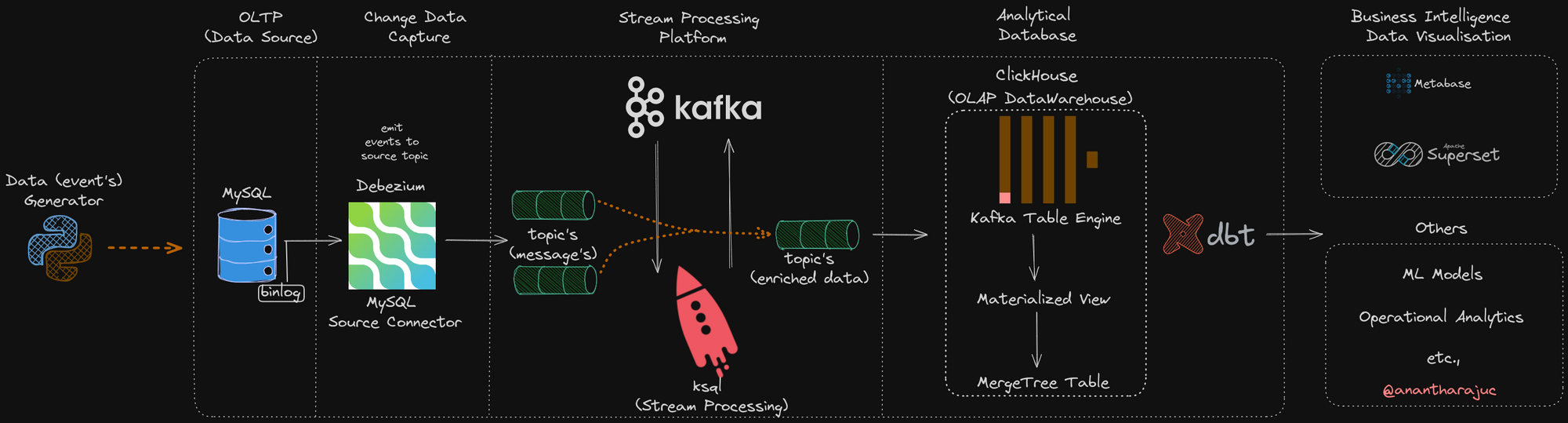

In the modern data-driven world, real-time data processing and analytics are critical for making timely and informed decisions. This post explores a data pipeline architecture for real-time data streaming, processing, and visualization using popular open-source tools like Python, MySQL, Kafka, and ClickHouse.

Code and Documentation

The complete code for this data pipeline, along with detailed documentation of all steps, is available on GitHub.

Key Features of the GitHub Project

- Step-by-Step Documentation: The GitHub repository includes detailed instructions for setting up and running the entire pipeline. This makes it easy for users to replicate the setup and understand each component's role.

- Sample Data Generator: Python scripts to simulate real-time data generation are provided, helping users to test and experiment with the pipeline.

- Configuration Files: Pre-configured settings for MySQL, Debezium, Kafka, and ClickHouse are included to streamline the setup process.

- Stream Processing with ksql: Examples of how to use ksql for real-time data enrichment and transformation are provided, showcasing the power of stream processing.

- Integration with BI Tools: Guidance on how to connect Metabase and Apache Superset to the data pipeline for visualization and reporting is available.

By following the instructions and leveraging the provided code, users can quickly set up a robust and scalable real-time data pipeline tailored to their specific needs.

Data Generation

The journey begins with the Data Generator. In our example, Python is used to simulate and generate event data. This could be any real-time data source, such as IoT devices, transaction logs, or user interactions on a website.

OLTP (Online Transaction Processing) Data Source

The generated data is initially stored in a MySQL database. MySQL serves as the OLTP data source, managing high transaction volumes and maintaining data integrity. It logs changes in real-time using a binlog (binary log), which captures all updates made to the database.

Change Data Capture (CDC) with Debezium

To stream changes from the MySQL database in real-time, Debezium is employed. Debezium is a distributed platform that captures row-level changes in databases. It acts as a MySQL Source Connector, monitoring the MySQL binlog for data changes and converting these changes into events.

Stream Processing Platform with Kafka

These change events are then sent to Kafka, a distributed streaming platform capable of handling large volumes of data with low latency. Kafka organizes these events into topics. Each topic is a log of messages, which can be processed in real-time.

To enrich and process these messages further, a stream processing tool like ksql (Kafka Stream Processing) can be used. ksql enables real-time data transformations and filtering directly on Kafka topics.

Analytical Database with ClickHouse

For analytical processing, the data is transferred from Kafka to ClickHouse, an OLAP (Online Analytical Processing) data warehouse known for its high performance and efficiency in handling analytical queries. ClickHouse consumes Kafka topics through its Kafka Table Engine. The data is then transformed into Materialized Views and stored in MergeTree Tables, optimized for fast query performance.

Business Intelligence and Data Visualization

Finally, the processed and aggregated data is ready for visualization and analysis. Tools like Metabase and Apache Superset can connect to ClickHouse, providing interactive dashboards and reports. Additionally, tools like dbt (data build tool) can be used for data transformation and modeling, enabling more advanced analytics and insights.

Other applications of this data pipeline include feeding into ML models for predictive analytics, performing operational analytics, and various other data-driven applications.

Conclusion

This data pipeline demonstrates a architecture for real-time data processing. By leveraging tools like Python, MySQL, Debezium, Kafka, ClickHouse, and various BI tools, organizations can ensure that they have timely and accurate data for decision-making and analytics. The flexibility of this setup make it suitable for a wide range of use cases, from operational monitoring to analytics etc.,